Question: Can people be identified based on the sound of their farts?

Short answer: Yes

Long answer: With the advent of artificial intelligence, there are growing concerns about the ability of governments or other agencies to track our movements. Usually these concerns are based on face or voice recognition technologies. But the average person farts 13 times per day. Is this something that privacy advocates should be concerned about?

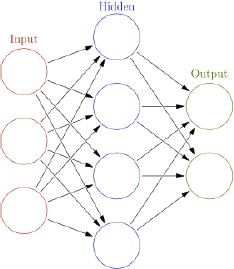

Any recognition technology must be based on examples, often many examples, and several members of the Invisible College were dedicated enough to contribute sufficient data to test the possible uniqueness of fart sounds. We then attempted to train a neural network to identify people from their recordings. Neural networks come in many varieties, but we used a simple 3-layer architecture, consisting of an input layer (representing the fart sounds), a single hidden layer, and an output layer (the network’s guess about the farter’s identity). The training procedure adjusts the weights between the layers to optimize the accuracy of the output, and in this sense, it is loosely analogous to some kinds of (supervised) learning in real brains.
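To make the architecture concrete, here is a minimal plain-Python sketch of the forward pass through such a 3-layer network. The tanh hidden activation, the softmax output, the 20-dimensional input, and the names `ThreeLayerNet` and `cross_entropy` are assumptions for illustration, not a description of the exact software we used. The training step that adjusts `w1` and `w2` is omitted.

```python
import math
import random


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    top = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ThreeLayerNet:
    """Input layer -> one hidden layer -> output layer with one unit per person."""

    def __init__(self, n_inputs, n_hidden, n_outputs, seed=0):
        rng = random.Random(seed)
        # Input-to-hidden weights (one row per hidden unit) and hidden biases.
        self.w1 = [[rng.gauss(0.0, 0.1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        # Hidden-to-output weights (one row per output unit) and output biases.
        self.w2 = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_outputs)]
        self.b2 = [0.0] * n_outputs

    def forward(self, x):
        """Return one probability per candidate farter for the feature vector x."""
        hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        scores = [sum(w * h for w, h in zip(row, hidden)) + b
                  for row, b in zip(self.w2, self.b2)]
        return softmax(scores)


def cross_entropy(probs, true_index):
    """Training loss: minus the log probability assigned to the correct person."""
    return -math.log(probs[true_index])


# Hypothetical sizes: 20 input features, 10 hidden units, 4 people.
net = ThreeLayerNet(n_inputs=20, n_hidden=10, n_outputs=4)
probs = net.forward([0.0] * 20)  # untrained net, all-zero input
```

With an all-zero input and zero biases, every hidden unit outputs tanh(0) = 0, so the network gives each of the four people equal odds. The cross-entropy is then log 4, which is the loss of a network that knows nothing.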

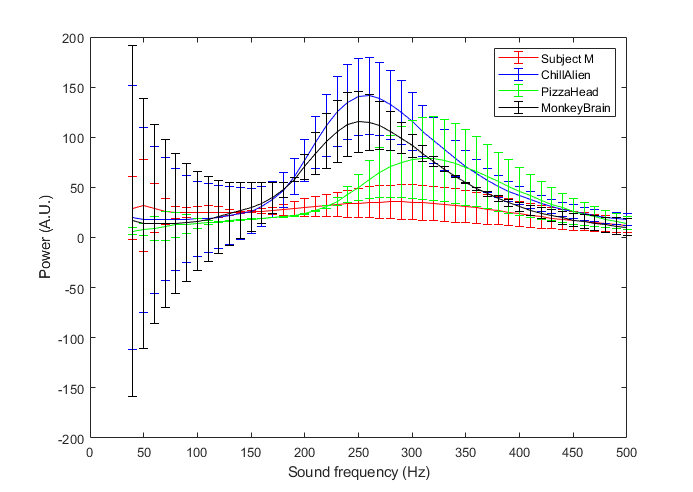

As with any algorithm, the performance is only as good as the data you give it, so there is the question of what to consider as the input. In our laboratory, audio is sampled at 44 kHz, which means about 100,000 samples per fart. Clearly, the dimensionality of this kind of data is vast relative to the quantity of data at hand, so we had to find a more compact way to present the input. An obvious solution would be to use the Fourier transform to represent each sound in terms of its constituent frequencies. The mean power spectrum of each subject’s farts is shown below.
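Before looking at those spectra, it may help to see how one is computed. For scale, 100,000 samples at 44,000 samples per second is roughly 2.3 seconds of audio. The plain-Python sketch below applies a direct discrete Fourier transform to a list of samples to get the power at each frequency. It then averages the power within frequency bands, so each sound becomes a short feature vector. The function names, the band edges, and the band-averaging step are illustrative assumptions rather than our exact preprocessing. A real pipeline would also use an FFT library instead of this O(N^2) loop.

```python
import cmath
import math


def power_spectrum(samples, sample_rate):
    """Return (frequencies in Hz, power) for the non-negative DFT bins of a real signal.

    Direct O(N^2) DFT for clarity; use an FFT in practice.
    """
    n = len(samples)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        # Correlate the signal with a complex sinusoid completing k cycles over the clip.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        freqs.append(k * sample_rate / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def band_average(freqs, power, edges):
    """Average power inside each [lo, hi) band (hypothetical compression step)."""
    features = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_band = [p for f, p in zip(freqs, power) if lo <= f < hi]
        features.append(sum(in_band) / len(in_band) if in_band else 0.0)
    return features


# Example: a 50 Hz tone sampled at 1 kHz for 0.1 s (100 samples).
rate = 1000
tone = [math.sin(2 * math.pi * 50 * t / rate) for t in range(100)]
freqs, power = power_spectrum(tone, rate)
features = band_average(freqs, power, [0, 100, 200, 300, 400, 501])
```

The 50 Hz tone completes exactly five cycles in 100 samples. Its power therefore lands in the 50 Hz bin, and only the first band (0 to 100 Hz) of the feature vector is non-negligible.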

The data show that most farts predominantly have power in sound frequencies between 200 and 400 Hz (the highly variable power at low frequencies reflects various sources of background noise and can be ignored). The spectral content of farts is the topic of a future post, but for now the important thing is that, while there are differences in the frequency content across individuals, there is also substantial overlap, which does not bode well for our efforts at classification.
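Observations like these can be checked numerically. The sketch below computes two things. The first is the fraction of a spectrum's power that falls between 200 and 400 Hz once the noisy low-frequency bins are discarded. The second is the cosine similarity between two subjects' spectra, as a rough measure of how much they overlap. Both functions, the toy spectra, and the 100 Hz noise cutoff are hypothetical choices for illustration, not the analysis we actually ran.

```python
import math


def band_power_fraction(freqs, power, lo_hz, hi_hz, noise_cutoff_hz):
    """Fraction of power in [lo_hz, hi_hz], ignoring bins below noise_cutoff_hz."""
    usable = [(f, p) for f, p in zip(freqs, power) if f >= noise_cutoff_hz]
    total = sum(p for _, p in usable)
    in_band = sum(p for f, p in usable if lo_hz <= f <= hi_hz)
    return in_band / total if total > 0 else 0.0


def spectral_similarity(spec_a, spec_b):
    """Cosine similarity of two non-negative spectra: 1.0 means identical shape."""
    dot = sum(a * b for a, b in zip(spec_a, spec_b))
    norm = math.sqrt(sum(a * a for a in spec_a)) * math.sqrt(sum(b * b for b in spec_b))
    return dot / norm if norm > 0 else 0.0


# Toy spectra on a coarse grid (Hz); the large values at 0 and 50 Hz mimic background noise.
freqs = [0, 50, 100, 200, 300, 400, 500]
subject_a = [90, 80, 1, 4, 5, 3, 1]
subject_b = [70, 95, 1, 3, 5, 4, 1]

fraction_a = band_power_fraction(freqs, subject_a, 200, 400, noise_cutoff_hz=100)
overlap = spectral_similarity(subject_a[2:], subject_b[2:])  # compare bins >= 100 Hz only
```

Here 12 of subject A's 14 usable power units fall between 200 and 400 Hz. The two toy subjects have a similarity of 51/52, which illustrates how heavily overlapping spectra make classification hard.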

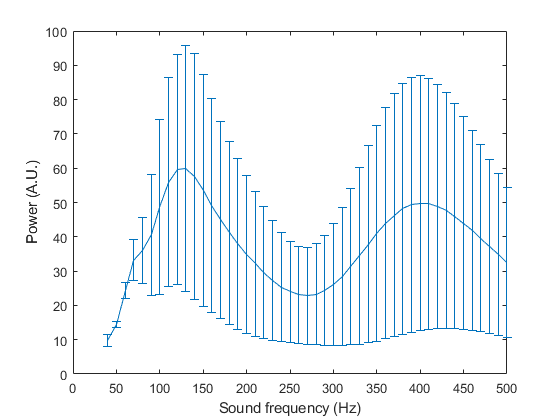

As an aside, we attempted at this point to increase the number of subjects by bringing on board a whoopee cushion, which we thought would be a quick and easy source of data. Unfortunately, it turns out that the sound frequencies produced by whoopee cushions are not actually very similar to those produced by human farts: they tend to alternate between very low frequencies (~100 Hz) and very high ones (>400 Hz), unlike biological flatulence. So we gave up on this approach and focused on the real thing.

We trained a neural network with 10 hidden units, using a scaled conjugate gradient backpropagation algorithm to optimize the weights. The network’s error was measured as cross-entropy, and performance was evaluated on a testing set (15% of the data) that was not used during training. With this network, test accuracy ranged from 44% to 74% on different runs, with an average of 63%. This is not bad, considering that chance performance is 25%. Adding more hidden units didn’t seem to help: we found 61% accuracy on average with 50 hidden units, and again 61% with 100 hidden units. Although it might be possible to do better with a different architecture, it does seem that the power spectrum is not the best input.
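The evaluation protocol described above can be sketched as follows. It holds out 15% of the recordings, measures the fraction of test farts attributed to the right person, and compares that with chance, which is 1 in 4 for four subjects. The helper names are hypothetical, and the weight optimization itself (scaled conjugate gradient) is not shown. Each run shuffles the data and starts from different weights, so accuracy varies between runs. The last helper therefore summarizes several runs instead of trusting one.

```python
import random
import statistics


def holdout_split(examples, test_fraction=0.15, seed=None):
    """Shuffle, then hold out test_fraction of the examples that training never sees."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def accuracy(predicted, actual):
    """Fraction of test examples whose predicted identity matches the true one."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def chance_level(n_people):
    """Accuracy expected from guessing uniformly at random among n_people."""
    return 1 / n_people


def summarize_runs(accuracies):
    """(min, mean, max) accuracy over repeated train/test runs."""
    return min(accuracies), statistics.mean(accuracies), max(accuracies)
```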

The problem of fart recognition is in some ways analogous to that of voice recognition: both are based on sound input, and the uniqueness of each individual’s data might be a consequence of their anatomy. In voice recognition, it is common to transform the power spectrum using the Mel-frequency cepstrum. This warps the frequency axis onto the mel scale, which approximates how human hearing resolves pitch. It then takes the logarithm of the energy in each band and applies a discrete cosine transform to yield a compact set of coefficients. Why this would help with fart recognition is not immediately obvious, but we decided to give it a try. Happily, when we transformed the data this way, performance improved markedly: with 10 hidden units, accuracy ranged from 83% to 96%, with a mean of 88%. With 50 hidden units, performance averaged 93% and surpassed 98% on some runs. This seemed to be optimal, as adding more units had very little effect.
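For readers unfamiliar with the transform, here is a minimal plain-Python sketch of the usual mel-frequency cepstral coefficient (MFCC) steps applied to a power spectrum. First, triangular filters are spaced evenly on the mel scale. Next, the logarithm of each filter's energy is taken. Finally, a discrete cosine transform is applied. The mel formula used here, 2595 · log10(1 + f/700), is one common convention among several. The filter count, coefficient count, and function names are illustrative assumptions, not the settings we used. In practice a library routine such as `librosa.feature.mfcc` would normally do this job.

```python
import math


def hz_to_mel(f_hz):
    """Mel value of a frequency, using the common 2595 * log10(1 + f / 700) convention."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(freqs, n_filters, f_min, f_max):
    """Triangular filters (one weight per frequency bin) with centres evenly spaced in mels."""
    mel_lo, mel_hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (mel_hi - mel_lo) / (n_filters + 1)
    edges = [mel_to_hz(mel_lo + i * step) for i in range(n_filters + 2)]
    bank = []
    for left, centre, right in zip(edges, edges[1:], edges[2:]):
        weights = []
        for f in freqs:
            if left <= f <= centre:
                weights.append((f - left) / (centre - left))    # rising slope
            elif centre < f <= right:
                weights.append((right - f) / (right - centre))  # falling slope
            else:
                weights.append(0.0)
        bank.append(weights)
    return bank


def dct_ii(values):
    """Unnormalized type-II discrete cosine transform."""
    n = len(values)
    return [sum(v * math.cos(math.pi * k * (i + 0.5) / n) for i, v in enumerate(values))
            for k in range(n)]


def mfcc_from_power(freqs, power, n_filters=20, n_coeffs=13, f_min=0.0, f_max=None):
    """Power spectrum -> mel filter energies -> log -> DCT; keep the first n_coeffs values."""
    if f_max is None:
        f_max = freqs[-1]
    bank = mel_filterbank(freqs, n_filters, f_min, f_max)
    energies = [sum(w * p for w, p in zip(weights, power)) for weights in bank]
    log_energies = [math.log(e + 1e-10) for e in energies]  # tiny offset avoids log(0)
    return dct_ii(log_energies)[:n_coeffs]


# Example: a flat spectrum from 0 to 500 Hz in 10 Hz steps.
freqs = [10.0 * i for i in range(51)]
coeffs = mfcc_from_power(freqs, [1.0] * len(freqs), n_filters=10, n_coeffs=5)
```

The log step compresses large differences in loudness, and the DCT decorrelates neighbouring filter energies. Together they produce a short, fairly independent set of features. That is one plausible reason this input suits a small network better than the raw power spectrum.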

So the bottom line is that people can be identified quite accurately based on the sound of their farts. At present, we don’t have enough subjects to say how well the system scales, and it would probably be necessary to use more sophisticated algorithms to deploy a nationwide fart recognition system, in the unlikely event that anyone ever wanted to do that. At the same time, the fact that a neural network with fewer than 100 neurons was able to reach 98% accuracy suggests that a human, with many billions of neurons, should at a minimum be able to recognize the fart sounds coming from other humans with whom they spend a lot of time.